Social Interaction in Naturalistic Verbal Contexts

Face-to-face communication has unique neural features

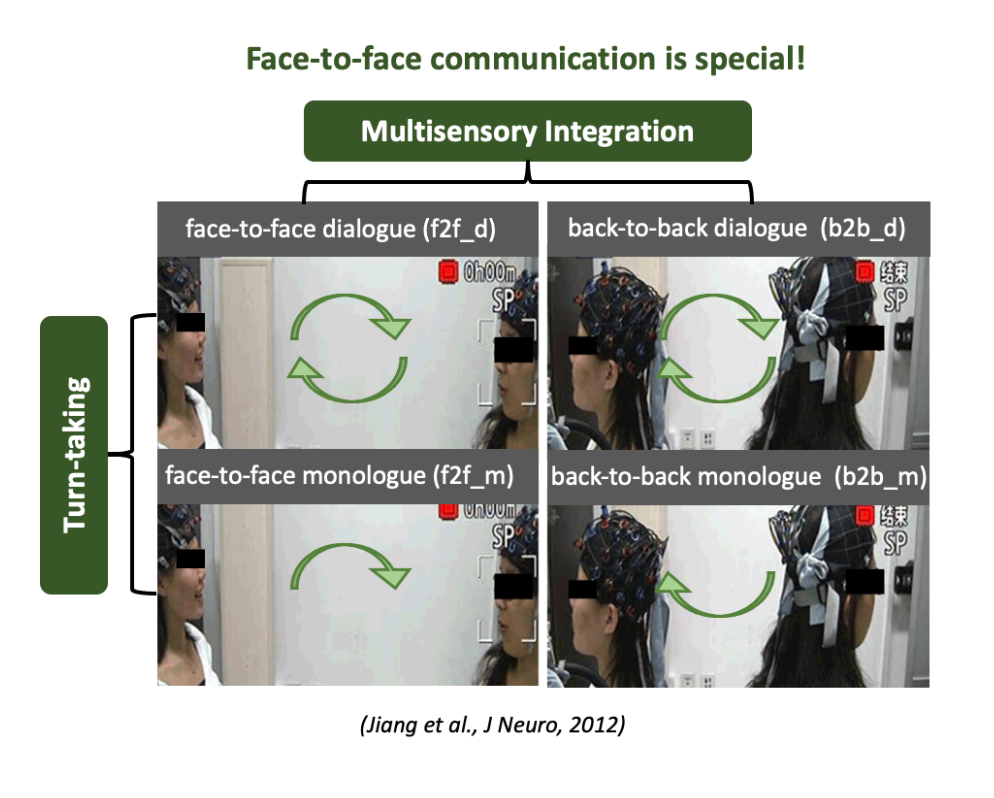

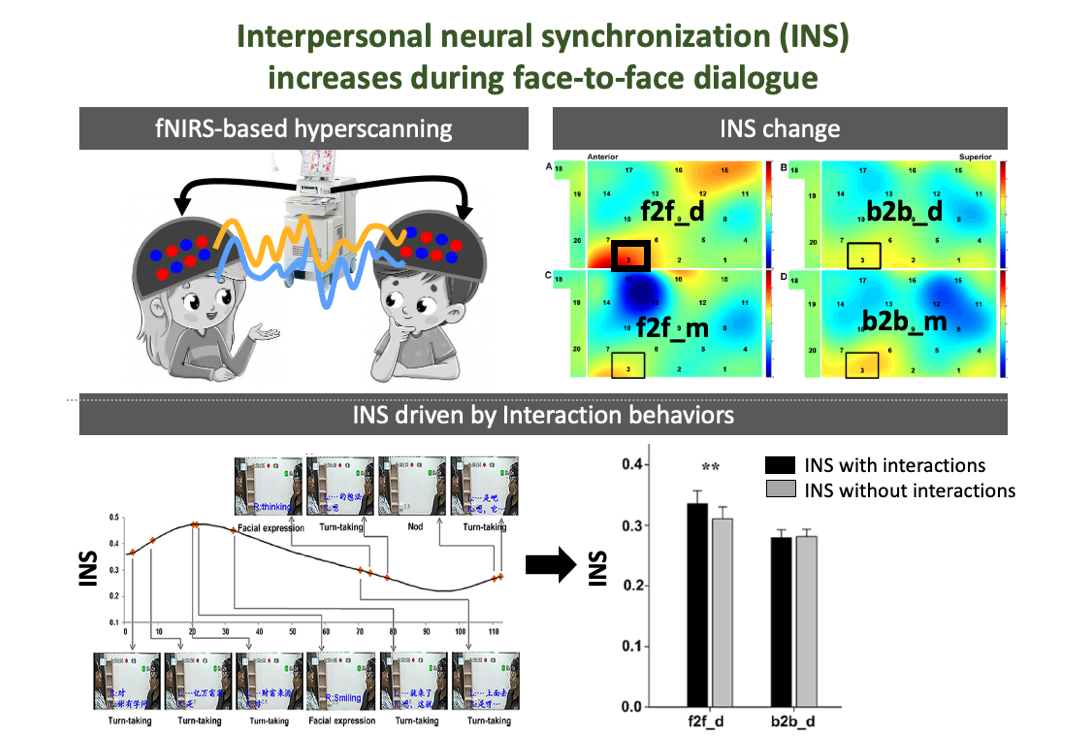

Although the human brain may have evolved for face-to-face communication, other modes of communication, such as telephone and e-mail, now increasingly dominate our daily social interaction. However, the neural features that distinguish face-to-face communication from other types of communication remain unknown. We developed a functional near-infrared spectroscopy (fNIRS)-based hyperscanning approach to measure activity simultaneously from two communicating brains. We identified a significant increase in interpersonal neural synchronization (INS) in the inferior frontal cortex during face-to-face dialogue between communicating partners, but not during the other conditions (face-to-face monologue, back-to-back dialogue, and back-to-back monologue). Moreover, this INS increase resulted primarily from the integration of multisensory information, such as facial expressions, during face-to-face dialogue. These results suggest that face-to-face communication, particularly dialogue, has neural features that other types of communication lack. This study also contributes a naturalistic approach (fNIRS-based hyperscanning) and a novel neuromarker (INS) to the field of social neuroscience, which may catalyze a new wave of research on how multiple brains interact with each other in diverse face-to-face interaction settings.

- Jiang J., Dai B., Peng D., Zhu C., Liu L., Lu C. (2012). Neural synchronization during face-to-face communication. Journal of Neuroscience, 32(45): 16064-16069.
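
The summaries on this page rely on INS computed from signals recorded simultaneously from two people. The exact measure is not described here (fNIRS hyperscanning studies often use wavelet transform coherence), so the sketch below uses a much simpler, clearly labeled stand-in: the mean Fisher-z-transformed Pearson correlation between two signals across sliding windows. The helper name `windowed_synchrony`, the window and step sizes, the assumed 10 Hz sampling rate, and the simulated signals are all hypothetical and are not taken from the cited studies.

```python
import numpy as np


def windowed_synchrony(x, y, win, step):
    """Illustrative INS proxy: mean Fisher-z Pearson r over sliding windows.

    Hypothetical helper; NOT the measure used in the cited studies.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape or x.ndim != 1:
        raise ValueError("x and y must be 1-D signals of equal length")
    if not 1 < win <= x.size or step < 1:
        raise ValueError("need 1 < win <= len(x) and step >= 1")
    zs = []
    for start in range(0, x.size - win + 1, step):
        # Pearson correlation between the two participants' signals in this window
        r = np.corrcoef(x[start:start + win], y[start:start + win])[0, 1]
        # Clip so arctanh stays finite when r is exactly +/-1
        r = np.clip(r, -0.999999, 0.999999)
        zs.append(np.arctanh(r))  # Fisher z makes r values averageable
    return float(np.mean(zs))


# Simulated example: 60 s of signal at an assumed 10 Hz sampling rate
rng = np.random.default_rng(0)
t = np.arange(600) / 10.0
shared = np.sin(2 * np.pi * 0.1 * t)  # component common to a "communicating" pair
a = shared + 0.5 * rng.standard_normal(t.size)
b = shared + 0.5 * rng.standard_normal(t.size)
c = rng.standard_normal(t.size)  # unrelated signal with no shared component

print(windowed_synchrony(a, b, win=100, step=50))  # clearly positive
print(windowed_synchrony(a, c, win=100, step=50))  # close to zero
```

Windowed correlation is used here only because it is short and transparent; coherence-based measures such as wavelet transform coherence additionally resolve synchrony by frequency, which a single correlation value per window cannot do.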


Humans build relationships through interpersonal neural synchronization (INS)

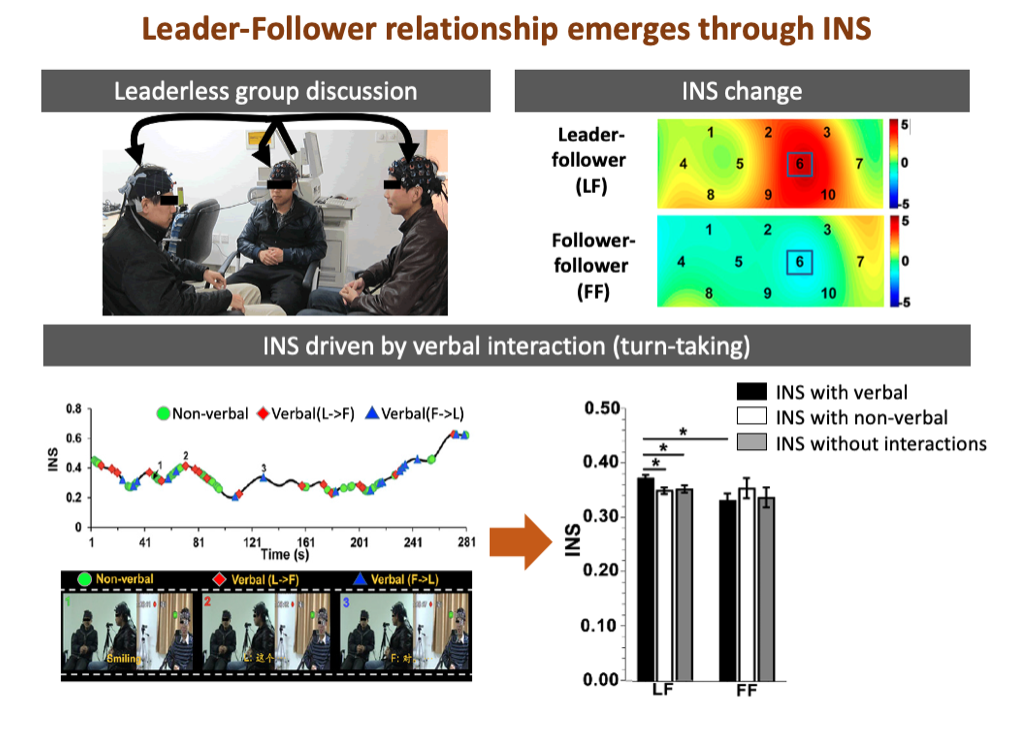

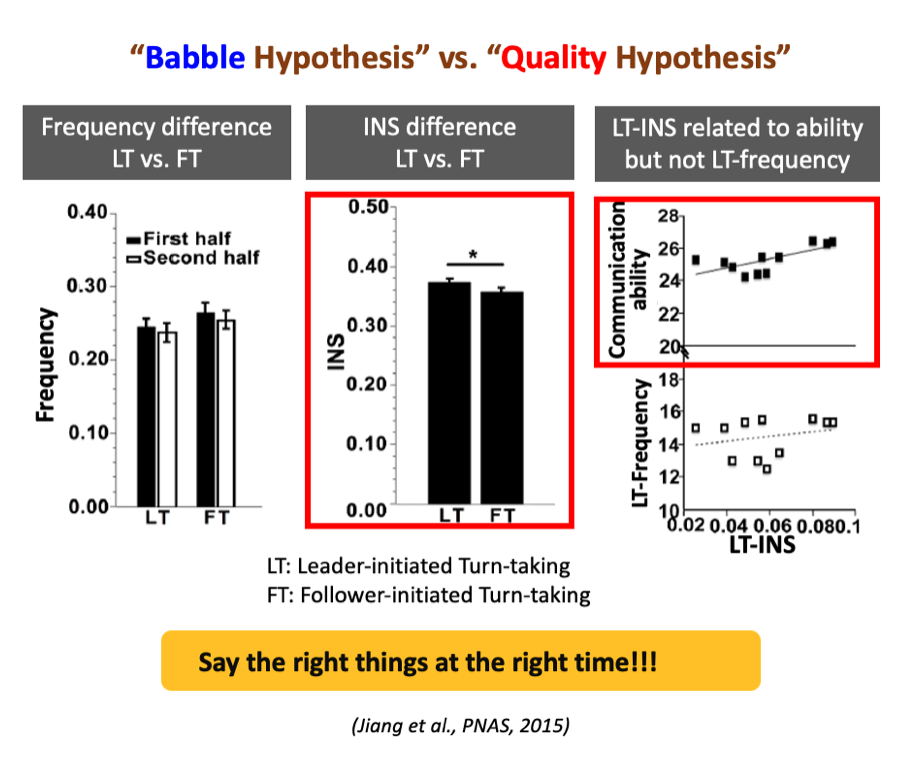

Human society consists of many kinds of interpersonal relationships. One important relationship, in both our evolutionary past and modern society, is that between leader and follower. However, little is known about how a leader-follower relationship emerges through INS during social interaction. We used fNIRS-based hyperscanning to measure brain activity simultaneously from three-person groups during a dynamic leaderless group discussion (LGD). INS in the left temporoparietal junction was significantly higher between leaders and followers than between followers. This difference was driven mainly by leader-initiated interactions and was related to leaders' communication skills and competence, but not to their communication frequency, supporting the "communication quality hypothesis" of leader emergence. INS even predicted when this relationship emerged over the course of the LGD. Together, these findings suggest that humans may build new relationships by synchronizing their brain activity with that of others. A leader-follower relationship emerges because leaders are able to say the right things at the right time.

- Jiang J., Chen C., Dai B., Shi G., Liu L., Lu C. (2015). Leader emergence through interpersonal neural synchronization. Proceedings of the National Academy of Sciences of the United States of America, 112(14): 4274-4279.


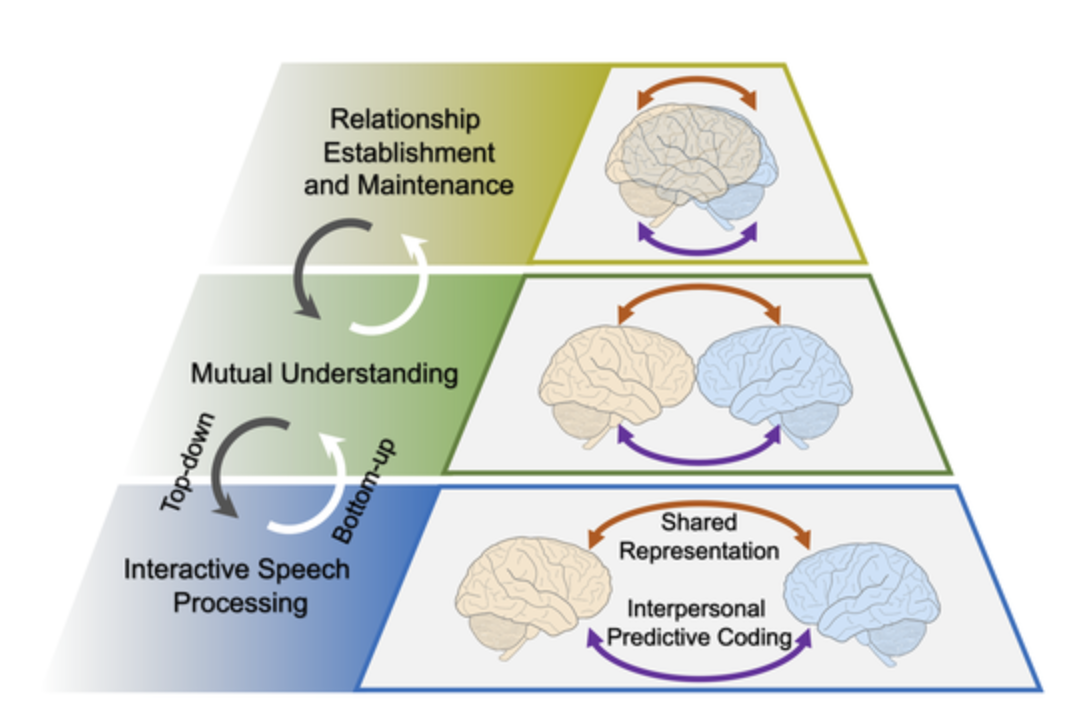

A hierarchical model for interpersonal verbal communication

The ability to use language makes us human. For decades, researchers have sought to understand the relationship between language and the human brain. However, most previous neuroscientific research has approached this question from a 'single-brain' perspective, neglecting the interpersonal nature of communication through language. With the development of modern hyperscanning techniques, researchers have begun to probe the neurocognitive processes underlying interpersonal verbal communication and to examine the role of interpersonal neural synchronization (INS) in communication. In most cases, however, the neurocognitive processes underlying INS remain obscure. To address this issue tentatively, we propose a hierarchical model based on findings from a growing body of hyperscanning research. We suggest that three levels of neurocognitive processes are primarily involved in interpersonal verbal communication and are closely associated with distinctive patterns of INS. These levels modulate each other bidirectionally. Furthermore, we argue that two processes, shared representation and interpersonal predictive coding, may coexist and work together at each level to facilitate successful interpersonal verbal communication. We hope this model will inspire innovative research in several directions within social and cognitive neuroscience.

- Jiang J., Zheng L., Lu C. (2021). A hierarchical model for interpersonal verbal communication. Social Cognitive and Affective Neuroscience (invited special issue), 16(1-2): 246-255.

Altered neural patterns reflect changes in non-verbal and verbal behaviors

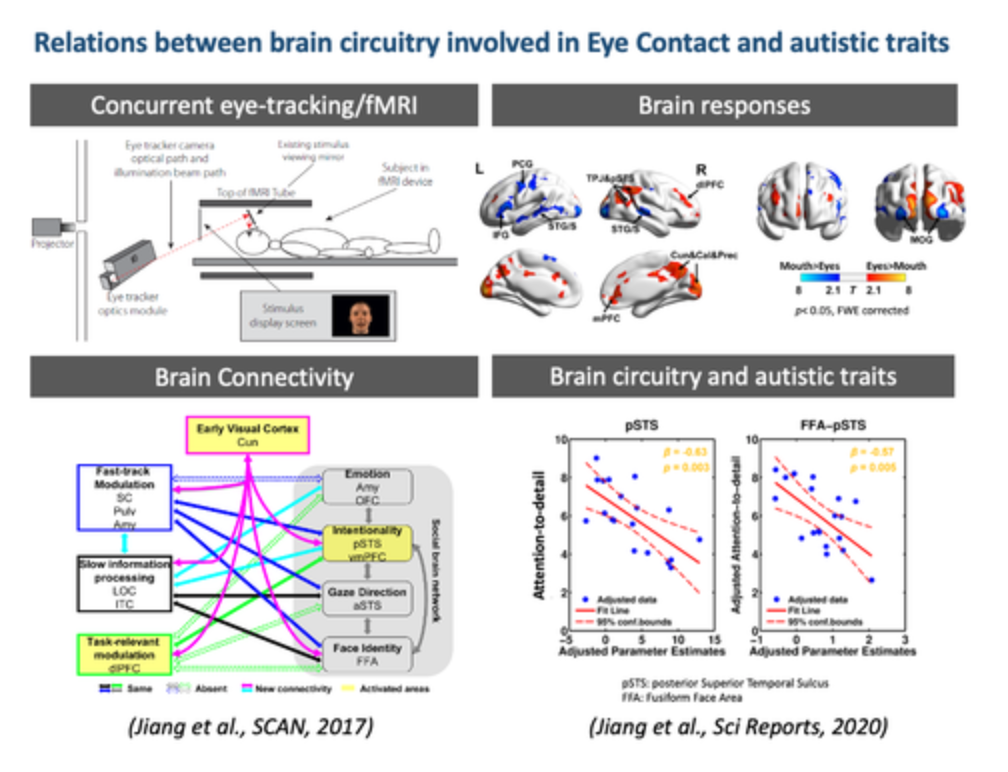

Eye contact is an important non-verbal cue in social interaction and occurs frequently and spontaneously during verbal communication. However, the brain circuitry underlying this behavior is unclear. We developed a novel paradigm to simulate eye contact in verbal communication. Using concurrent eye-tracking and fMRI, we simultaneously recorded participants' eye movements and neural activity while they freely watched a pre-recorded speaker talking. We found an extensive network of brain regions that showed specific responses and connectivity patterns during eye contact (Jiang et al., 2017, SCAN). Interestingly, the strength of connectivity between brain areas involved in face processing was related to the level of autistic traits in neurotypical individuals (Jiang et al., 2020, Scientific Reports).

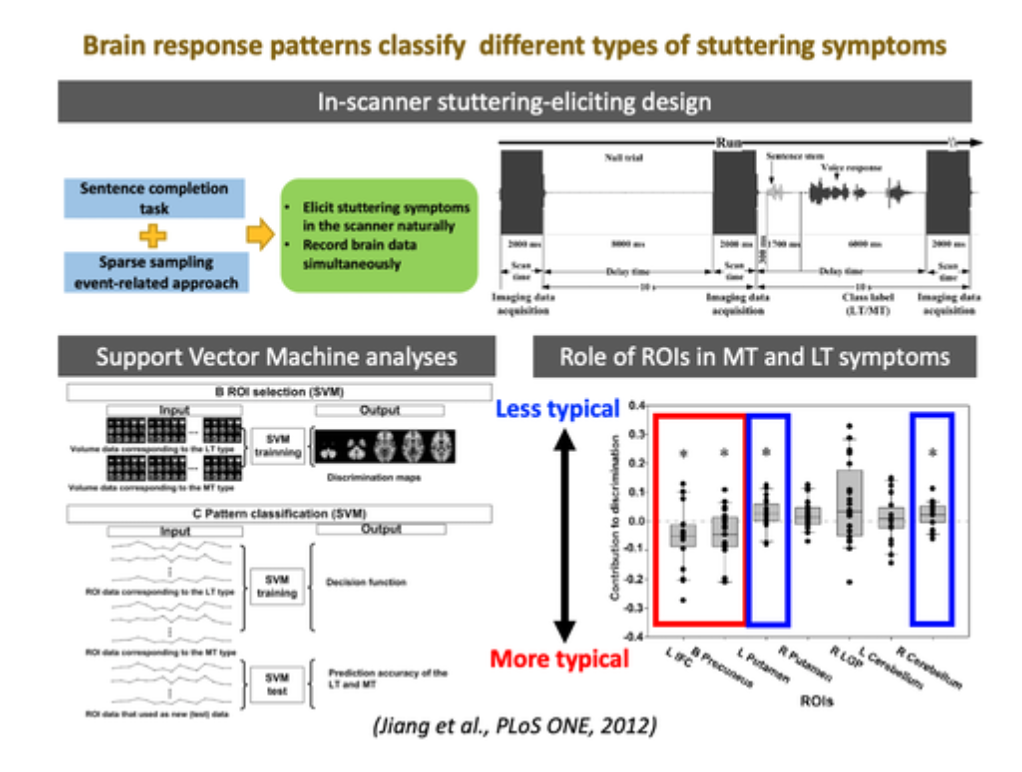

Like reduced eye contact, stuttering is a common communication difficulty that affects social interaction. Using a sparse-sampling fMRI paradigm, we designed a speech production task that naturally elicits patients' stuttering symptoms in the scanner while simultaneously measuring their brain responses to different stuttering symptoms. Distinct patterns of altered brain activity were associated with different stuttering symptoms. These findings have important theoretical and clinical implications for patients whose stuttering is dominated by different types of symptoms (Jiang et al., 2012, PLoS ONE).

- Jiang J., Borowiak K., Tudge L., Otto C., von Kriegstein K. (2017). Neural mechanisms of eye contact when listening to another person talking. Social Cognitive and Affective Neuroscience, 319-328.

- Jiang J., von Kriegstein K., Jiang J. (2020). Brain mechanisms of eye contact during verbal communication predict autistic traits in neurotypical individuals. Scientific Reports, 10(1): 14602.

- Jiang J., Lu C., Peng D., Zhu C., Howell P. (2012). Classification of types of stuttering symptoms based on brain activity. PLoS ONE, 7(6): e39747.
